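Storage

1. Introduction

In Kubernetes (k8s), the storage layer persists data files so that data is not lost when a workload fails over to another node. Kubernetes does not mandate a single storage backend; commonly used distributed storage systems include NFS, GlusterFS, CephFS, and Longhorn.

2. Environment preparation

NFS (Network File System) is a simple, widely supported network file system. The steps below set up an NFS server to provide shared storage.

2.1 Install NFS

Run on all nodes:

    yum -y install nfs-utils

2.2 Configure NFS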

- On the master node, export the /nfs/data directory:

    [root@node101 ~]# mkdir -p /nfs/data
    ## * lets any host connect; insecure accepts requests from non-privileged client ports (above 1024)
    [root@node101 ~]# echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
    [root@node101 ~]# systemctl enable rpcbind --now
    [root@node101 ~]# systemctl enable nfs-server --now
    ## Make the export configuration take effect immediately
    [root@node101 ~]# exportfs -r

- On the worker nodes node102 and node103, run:

    ## 172.17.218.217 is the master node IP; list the exports that can be mounted
    [root@node102 ~]# showmount -e 172.17.218.217
    Export list for 172.17.218.217:
    /nfs/data *
    ## Create the same /nfs/data directory locally
    [root@node102 ~]# mkdir -p /nfs/data
    ## Mount the remote directory on the local /nfs/data directory
    [root@node102 ~]# mount -t nfs 172.17.218.217:/nfs/data /nfs/data

- Verify:

    [root@node101 ~]# cd /nfs/data
    [root@node101 data]# ls
    [root@node101 data]# touch 1.txt
    [root@node102 ~]# ls /nfs/data
    1.txt
    [root@node103 ~]# ls /nfs/data
    1.txt

3. Mounting data natively

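- A native mount does not create the directory automatically, so create the nginx-pv directory by hand for the Pod volume mount used later:

    [root@node101 ~]# mkdir -p /nfs/data/nginx-pv

- Write the native-mount.yaml file: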

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-pv
      namespace: zk-dev
      labels:
        app: nginx-pv
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx-pv
      template:
        metadata:
          name: nginx-pv
          labels:
            app: nginx-pv
        spec:
          containers:
            - image: docker.io/library/nginx:1.21.5
              name: nginx-pv
              imagePullPolicy: IfNotPresent
              # Where the volume is mounted inside the container
              volumeMounts:
                - mountPath: /usr/share/nginx/html
                  name: html
          # Volumes made available to the Pod
          volumes:
            - name: html
              nfs:
                server: 172.17.218.217
                path: /nfs/data/nginx-pv
          restartPolicy: Always

- Apply native-mount.yaml:

    [root@node101 ~]# kubectl apply -f native-mount.yaml
    deployment.apps/nginx-pv created

- Write an index.html file:

    [root@node101 ~]# cd /nfs/data/nginx-pv/
    [root@node101 nginx-pv]# ls
    [root@node101 nginx-pv]# echo "<html><head><meta><title>index</title></head><body><h2>native-mount</h2></body></html>" > index.html

- Access nginx-pv. The home page is now our custom page rather than the default nginx page:

    [root@node101 nginx-pv]# kubectl get pod -owide -n zk-dev
    NAME                        READY   STATUS    RESTARTS   AGE   IP                NODE      NOMINATED NODE   READINESS GATES
    nginx-pv-799c89db64-xpdq5   1/1     Running   0          15s   192.168.111.139   node103   <none>           <none>
    [root@node101 nginx-pv]# curl 192.168.111.139
    <html><head><meta><title>index</title></head><body><h2>native-mount</h2></body></html>

4. PV and PVC

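Native mounts have several drawbacks: the directory is not created automatically, the mounted directory is not cleaned up after the Pod is deleted, and there is no limit on disk usage. Using persistent volumes together with persistent volume claims is recommended instead.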

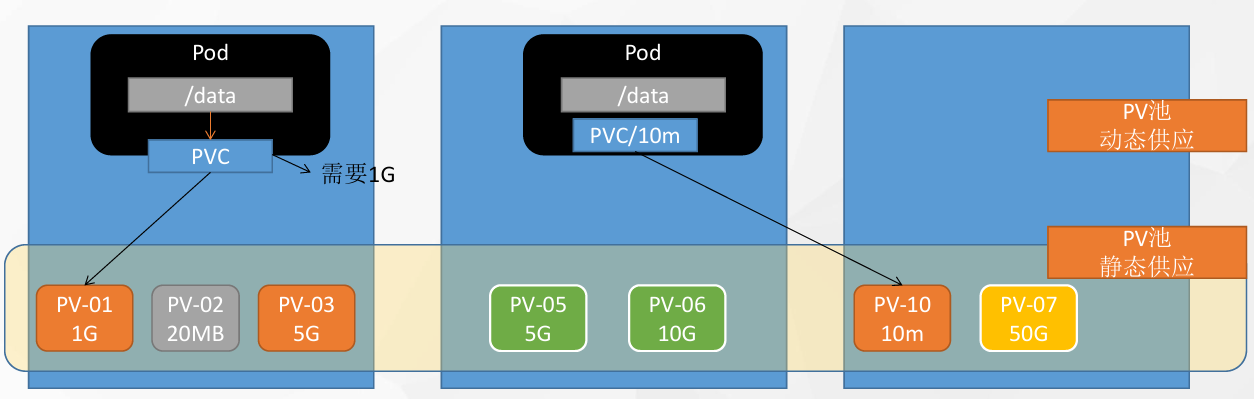

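- PV: Persistent Volume. Stores the data an application needs to persist at a specified location.

- PVC: Persistent Volume Claim. Declares the specification of the persistent volume the application needs.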

4.1 Create a PV pool

Create the directories 01, 02, and 03 under /nfs/data/:

    [root@node101 ~]# mkdir -p /nfs/data/01 /nfs/data/02 /nfs/data/03
    [root@node101 ~]# ls /nfs/data
    01  02  03  1.txt  nginx-pv

4.2 Create the PVs

Write the pvs.yaml file with the following content:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv01-10m
    spec:
      capacity:
        # NOTE: the lowercase suffix m means milli (10m = 0.010 bytes); 10 mebibytes is written 10Mi
        storage: 10m
      # Access mode: read-write from multiple nodes
      accessModes:
        - ReadWriteMany
      storageClassName: nfs
      nfs:
        path: /nfs/data/01
        server: 172.17.218.217
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv02-1g
    spec:
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteMany
      storageClassName: nfs
      nfs:
        path: /nfs/data/02
        server: 172.17.218.217
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv03-50m
    spec:
      capacity:
        storage: 50m
      accessModes:
        - ReadWriteMany
      storageClassName: nfs
      nfs:
        path: /nfs/data/03
        server: 172.17.218.217

Apply the PV definitions:

    [root@node101 ~]# kubectl apply -f pvs.yaml
    persistentvolume/pv01-10m created
    persistentvolume/pv02-1g created
    persistentvolume/pv03-50m created

List the PVs in the cluster:

    [root@node101 ~]# kubectl get persistentvolume
    NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
    pv01-10m   10m        RWX            Retain           Available           nfs            <unset>                          9m34s
    pv02-1g    1Gi        RWX            Retain           Available           nfs            <unset>                          2m28s
    pv03-50m   50m        RWX            Retain           Available           nfs            <unset>                          9m34s

4.3 Create and bind a PVC

- Create the pvc-nginx.yml file, which defines the PVC:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nginx-pvc
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 20m
      storageClassName: nfs

- Apply the PVC:

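    [root@node101 ~]# kubectl apply -f pvc-nginx.yml
    Warning: spec.resources.requests[storage]: fractional byte value "20m" is invalid, must be an integer
    persistentvolumeclaim/nginx-pvc created

The warning appears because the suffix m means milli, so 20m is 0.020 bytes; a 20-mebibyte request would be written 20Mi.

- Check which PV the PVC is bound to: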

    [root@node101 ~]# kubectl get pv
    NAME       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM               STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
    pv01-10m   10m        RWX            Retain           Available                       nfs            <unset>                          5h39m
    pv02-1g    1Gi        RWX            Retain           Available                       nfs            <unset>                          5h32m
    pv03-50m   50m        RWX            Retain           Bound       default/nginx-pvc   nfs            <unset>                          5h39m

The output shows that pv03-50m is the PV bound to the PVC.
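Why pv03-50m and not pv02-1g? Among the PVs whose storage class and access modes match, Kubernetes needs one with at least the requested capacity and, as far as I know, prefers the smallest volume that qualifies. The sketch below imitates only that size comparison in plain shell. `to_millibytes` and `pick_pv` are hypothetical helper names, not kubectl features, and only the suffixes m, Ki, Mi, and Gi are handled. Working in thousandths of a byte also makes it visible why 20m is a fractional byte value.

```shell
# Convert a Kubernetes quantity to milli-bytes (1 byte = 1000 milli-bytes),
# so that values such as "20m" (0.020 bytes) remain integers.
# Assumption: only m, Ki, Mi, Gi and plain integers are supported.
to_millibytes() {
  case "$1" in
    *Ki) echo $(( ${1%Ki} * 1024 * 1000 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 * 1000 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 * 1000 )) ;;
    *m)  echo "${1%m}" ;;
    *)   echo $(( $1 * 1000 )) ;;
  esac
}

# Print the name of the smallest PV whose capacity covers the request.
# Usage: pick_pv <request> <name=capacity>...
# Returns non-zero when no PV is large enough.
# The body runs in a subshell, so its variables do not leak to the caller.
pick_pv() (
  request=$(to_millibytes "$1"); shift
  best="" best_cap=0
  for pair in "$@"; do
    name=${pair%%=*}
    cap=$(to_millibytes "${pair#*=}")
    if [ "$cap" -ge "$request" ] && { [ -z "$best" ] || [ "$cap" -lt "$best_cap" ]; }; then
      best=$name best_cap=$cap
    fi
  done
  [ -n "$best" ] && echo "$best"
)

# Example with the three PVs from this section:
#   pick_pv 20m pv01-10m=10m pv02-1g=1Gi pv03-50m=50m   # prints pv03-50m
```

pv01-10m is too small for the request, and of the two remaining candidates pv03-50m is the smaller one, which matches the binding shown by kubectl.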

4.4 Create Pods that use the PVC

Write the deploy-pvc.yml file:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deploy-pvc
      labels:
        app: nginx-deploy-pvc
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx-deploy-pvc
      template:
        metadata:
          labels:
            app: nginx-deploy-pvc
        spec:
          containers:
            - name: nginx
              image: docker.io/library/nginx:1.21.5
              volumeMounts:
                - mountPath: /usr/share/nginx/html
                  name: html
          volumes:
            - name: html
              # Use the PVC as the volume source
              persistentVolumeClaim:
                claimName: nginx-pvc

- Apply the Deployment:

    [root@node101 ~]# kubectl apply -f deploy-pvc.yml
    deployment.apps/nginx-deploy-pvc created
    [root@node101 ~]# kubectl get pod -owide
    NAME                                READY   STATUS    RESTARTS   AGE     IP                NODE      NOMINATED NODE   READINESS GATES
    nginx-deploy-pvc-64bbb4bb9b-hzx8j   1/1     Running   0          2m20s   192.168.111.143   node103   <none>           <none>
    nginx-deploy-pvc-64bbb4bb9b-p4kf8   1/1     Running   0          2m20s   192.168.200.103   node102   <none>           <none>

- Verify the Deployment:

    ## Add index.html to the mounted directory /nfs/data/03
    [root@node101 ~]# cd /nfs/data/03
    [root@node101 03]# echo "<html><head><title>index</title></head><body></body></html>" > index.html
    [root@node101 03]# curl 192.168.111.143
    <html><head><title>index</title></head><body></body></html>
    [root@node101 03]# curl 192.168.200.103
    <html><head><title>index</title></head><body></body></html>

Both Pods no longer serve the default nginx page; they serve the index.html stored in /nfs/data/03.
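Running curl against each Pod IP by hand becomes tedious as the replica count grows. The following is a minimal sketch of a helper that does the same check for every Pod behind a label selector. It assumes kubectl and curl are available on the node and that the Pod IPs are reachable from it, as in the session above; `check_pods` is a hypothetical name.

```shell
# Fetch the home page from every Pod matching a label selector and
# print one "<pod-ip> <response body>" line per Pod.
# Usage: check_pods <label-selector>
check_pods() (
  selector=$1
  # jsonpath prints the Pod IPs separated by spaces
  ips=$(kubectl get pod -l "$selector" -o jsonpath='{.items[*].status.podIP}')
  for ip in $ips; do
    printf '%s %s\n' "$ip" "$(curl -s --max-time 5 "http://$ip/")"
  done
)

# Example:
#   check_pods app=nginx-deploy-pvc
```

If every line shows the same body, all replicas are reading the same file from the shared volume.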

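5. ConfigMap

If only configuration files need to be stored, Kubernetes provides ConfigMap, which enables centralized management and dynamic updates of configuration. The following uses a Redis configuration file as an example.

5.1 Create the ConfigMap

Create the redis.conf file:

    requirepass 123456

Create a ConfigMap named redis-conf: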

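    kubectl create cm redis-conf --from-file=redis.conf
    [root@node101 ~]# kubectl get cm
    NAME               DATA   AGE
    kube-root-ca.crt   1      26d
    redis-conf         1      84s

Like other Kubernetes objects, a ConfigMap is stored in etcd, and its data consists of key-value pairs. With --from-file, the key is the file name and the value is the file content. A ConfigMap can also be created from a YAML file: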

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: redis-conf
    data:
      # Key-value form
      "redis.conf": "requirepass 123456"

5.2 Create the Pod

Write the StatefulSet manifest:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: redis-dep
      labels:
        app: redis-dep
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: redis-dep
      template:
        metadata:
          name: redis-dep
          labels:
            app: redis-dep
        spec:
          containers:
            - name: redis-dep
              image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/redis:7.4.5-alpine
              imagePullPolicy: IfNotPresent
              command:
                - "redis-server"
              args:
                - "/redis/conf/redis.conf"
              env:
                - name: MASTER
                  value: "true"
              livenessProbe: # Liveness probe
                exec:
                  command: ["redis-cli", "ping"]
                initialDelaySeconds: 120
                periodSeconds: 30
              ports:
                - containerPort: 6379
              # Mount two directories
              volumeMounts:
                - mountPath: "/data"
                  name: "data"
                - mountPath: "/redis/conf"
                  name: "redis-config"
          volumes:
            - name: data
              # Temporary directory; Kubernetes decides where it lives
              emptyDir: {}
            - name: "redis-config"
              configMap:
                name: redis-conf
                items:
                  - key: redis.conf
                    path: redis.conf
          restartPolicy: Always
      serviceName: redis-service

Deploy the Redis middleware:

[root@node101 ~]# kubectl apply -f cm-deploy.yml

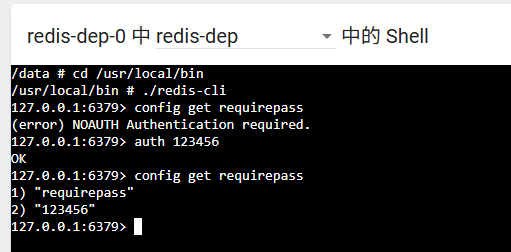

statefulset.apps/redis-dep created在k8s的dashboard页面上,查看Pod的内部redis情况: ConfigMap还提供配置热更新能力,编辑redis-conf的ConfigMap:

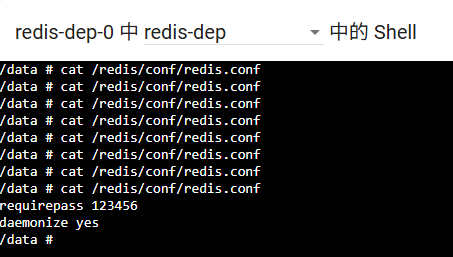

ConfigMap还提供配置热更新能力,编辑redis-conf的ConfigMap:

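    ## Add the line: daemonize yes
    [root@node101 ~]# kubectl edit cm redis-conf
    configmap/redis-conf edited

Viewing redis.conf inside the redis-dep-0 Pod shows that the file has been updated to the latest content (after roughly 30 seconds). Checking Redis itself, however, shows that although the file changed, the new setting has not taken effect, because Redis reads its configuration file only at startup.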
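One way to make Redis pick up the changed file is to restart the Pods so that redis-server starts again with the updated redis.conf. The sketch below wraps the two kubectl commands involved; `restart_statefulset` is a hypothetical helper name and the 120-second timeout is an arbitrary choice.

```shell
# Trigger a rolling restart of a StatefulSet and wait until it completes.
# Usage: restart_statefulset <name>
restart_statefulset() (
  kubectl rollout restart "statefulset/$1" &&
  kubectl rollout status "statefulset/$1" --timeout=120s
)

# Example:
#   restart_statefulset redis-dep
```

A word of caution before trying this with the edit shown above: `daemonize yes` makes redis-server fork into the background, and in a container that usually ends the main process, so a harmless setting is a safer choice for experimenting with restarts.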

6. Secret

The Secret object type stores sensitive information such as passwords, OAuth tokens, and SSH keys. Keeping this information in a Secret is safer and more flexible than putting it in a Pod definition or a container image.

6.1 Create a Secret

    [root@node101 ~]# kubectl create secret docker-registry jiebaba-repository \
    --docker-username=jiebaba01 \
    --docker-password=123456789 \
    --docker-email=jiebaba01@gmail.com
    secret/jiebaba-repository created

6.2 View the Secret

    [root@node101 ~]# kubectl get secret
    NAME                 TYPE                             DATA   AGE
    jiebaba-repository   kubernetes.io/dockerconfigjson   1      63s
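The DATA column shows 1 because a Secret of type kubernetes.io/dockerconfigjson keeps the registry credentials under a single key, .dockerconfigjson. Secret values are base64-encoded rather than encrypted, which the following sketch makes visible by decoding that key. `show_registry_secret` is a hypothetical helper name, and `base64 -d` assumes the GNU coreutils version found on the CentOS nodes used here.

```shell
# Print the decoded Docker config JSON stored in a docker-registry Secret.
# Usage: show_registry_secret <secret-name>
show_registry_secret() (
  # The leading dot of the key has to be escaped inside the jsonpath expression
  kubectl get secret "$1" -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d
)

# Example:
#   show_registry_secret jiebaba-repository
```

Because anyone who can read the Secret can decode it this way, access to Secrets should be restricted accordingly. A Pod uses such a Secret for pulling images by listing its name under imagePullSecrets in the Pod spec.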