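HDFS: Data Balancing and Whitelist/Blacklist

In production, a node that runs short of disk space often gets an additional disk. A newly mounted disk holds no data, so you can run the disk balancer command to even out data across the node's disks. (New in Hadoop 3.x.)

1. Balancing data across disks

1.1 Generate a balancing plan (I currently have only one disk, so no plan is generated)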

sh

[jack@hadoop103 software]$ hdfs diskbalancer -plan hadoop103

2024-01-23 15:25:51,924 INFO balancer.NameNodeConnector: getBlocks calls for hdfs://hadoop102:8020 will be rate-limited to 20 per second

2024-01-23 15:25:55,522 INFO planner.GreedyPlanner: Starting plan for Node : hadoop103:9867

2024-01-23 15:25:55,522 INFO planner.GreedyPlanner: Compute Plan for Node : hadoop103:9867 took 30 ms

2024-01-23 15:25:55,524 INFO command.Command: No plan generated. DiskBalancing not needed for node: hadoop103 threshold used: 10.0

No plan generated. DiskBalancing not needed for node: hadoop103 threshold used: 10.0
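The `threshold used: 10.0` in the output above is a percentage. A disk only takes part in balancing when its usage differs from the node-wide ideal by more than that threshold, and with a single disk the difference is always zero, which is why no plan was generated here. Below is a rough sketch of that check, assuming a simplified model rather than the actual GreedyPlanner logic; the function name `needs_balancing` and the input format are made up for illustration.

```shell
#!/usr/bin/env bash
# Simplified model of the disk balancer threshold check.
# This is an illustration only, NOT the real GreedyPlanner code.
# stdin: one line per disk, "<name> <used> <capacity>" (same unit for both).
# $1:    threshold in percent (defaults to 10, as in the log above).
needs_balancing() {
    local threshold="${1:-10}"
    awk -v threshold="$threshold" '
        { name[NR] = $1; used[NR] = $2; cap[NR] = $3
          total_used += $2; total_cap += $3 }
        END {
            if (total_cap == 0) exit 1
            # Ideal usage ratio if data were spread evenly over all disks.
            ideal = total_used / total_cap
            for (i = 1; i <= NR; i++) {
                # Deviation of this disk from the ideal, in percentage points.
                dev = (used[i] / cap[i] - ideal) * 100
                if (dev < 0) dev = -dev
                status = (dev > threshold) ? "unbalanced" : "ok"
                printf "%s %.1f %s\n", name[i], dev, status
            }
        }'
}

# Example: one full disk plus one freshly added empty disk.
# printf 'disk1 90 100\ndisk2 0 100\n' | needs_balancing 10
```

Feeding it a single disk always reports a deviation of 0.0, which matches the "DiskBalancing not needed" message.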

1.2 Execute the balancing plan

sh

[jack@hadoop103 software]$ hdfs diskbalancer -execute hadoop103.plan.json

1.3 Check the status of the current balancing task

sh

[jack@hadoop103 software]$ hdfs diskbalancer -query hadoop103

1.4 Cancel the balancing task

sh

[jack@hadoop103 software]$ hdfs diskbalancer -cancel hadoop103.plan.json

2. Whitelist and blacklist

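Whitelist: only hosts whose addresses appear in the whitelist may be used to store data.

In enterprise environments, configuring a whitelist helps guard against malicious access by attackers.

Blacklist: hosts whose addresses appear in the blacklist may not be used to store data; it is typically used when decommissioning DataNodes.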
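To make the rule concrete, here is a minimal sketch of the admission logic, assuming a simplified model: a host may store data if it is in the whitelist (or the whitelist is empty or missing) and is not in the blacklist. This is not the NameNode's own code, and `host_allowed` is a hypothetical helper name. It can be handy for sanity-checking the two files before touching the cluster.

```shell
#!/usr/bin/env bash
# Simplified admission model for illustration only (NOT NameNode code).
# Usage: host_allowed <host> <whitelist-file> <blacklist-file>
# Returns 0 when the host would be allowed to store data, 1 otherwise.
host_allowed() {
    local host="$1" whitelist="$2" blacklist="$3"

    # A host listed in the blacklist is always rejected.
    if [ -s "$blacklist" ] && grep -qxF -- "$host" "$blacklist"; then
        return 1
    fi

    # An empty or missing whitelist permits every host.
    if [ ! -s "$whitelist" ]; then
        return 0
    fi

    # Otherwise the host must appear on its own line in the whitelist.
    grep -qxF -- "$host" "$whitelist"
}

# Example (file names are the ones used later in this post):
# host_allowed hadoop104 whitelist blacklist && echo allowed || echo rejected
```

The `-x` and `-F` flags make `grep` match whole lines literally, so `hadoop10` does not accidentally match `hadoop102`.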

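2.1 Create the whitelist and blacklist files

- Create the two list files (they must sit at the paths that hdfs-site.xml points to) and add hadoop102 and hadoop103 to the whitelist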

sh

[jack@hadoop103 software]$ touch whitelist blacklist

[jack@hadoop102 hadoop]$ echo hadoop102 > whitelist

[jack@hadoop102 hadoop]$ echo hadoop103 >> whitelist

- Configure hdfs-site.xml

xml

<!-- Whitelist -->
<property>
    <name>dfs.hosts</name>
    <value>/opt/module/hadoop-3.3.6/etc/hadoop/whitelist</value>
</property>

<!-- Blacklist -->
<property>
    <name>dfs.hosts.exclude</name>
    <value>/opt/module/hadoop-3.3.6/etc/hadoop/blacklist</value>
</property>

- Distribute the configuration files

sh

[jack@hadoop104 etc]$ xsync hadoop

- The first time a whitelist is added, the cluster must be restarted; for later changes, refreshing the NameNode is enough

sh

[jack@hadoop102 hadoop-3.3.6]$ hadoop_helper stop

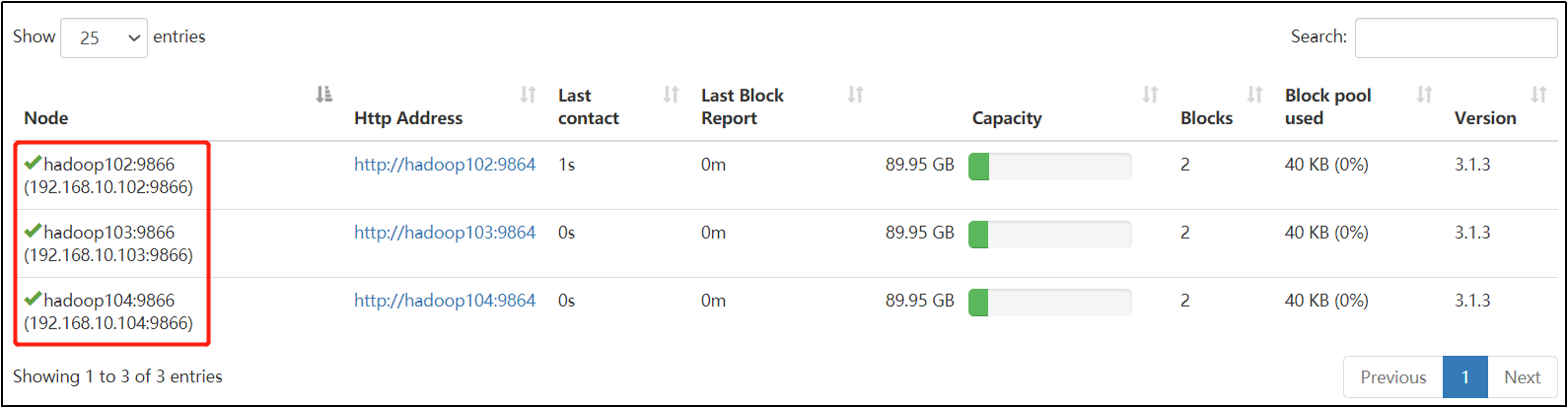

[jack@hadoop102 hadoop-3.3.6]$ hadoop_helper start

- View the DataNodes in a web browser: http://hadoop102:9870/dfshealth.html#tab-datanode

- Uploading data from hadoop104 fails

sh

[jack@hadoop104 hadoop-3.3.6]$ hadoop fs -put NOTICE.txt /

- Edit the whitelist a second time, adding hadoop104

sh

[jack@hadoop102 hadoop]$ vim whitelist

Change its contents to the following, one host per line:

hadoop102
hadoop103
hadoop104

- Refresh the NameNode

sh

[jack@hadoop102 hadoop-3.3.6]$ hdfs dfsadmin -refreshNodes

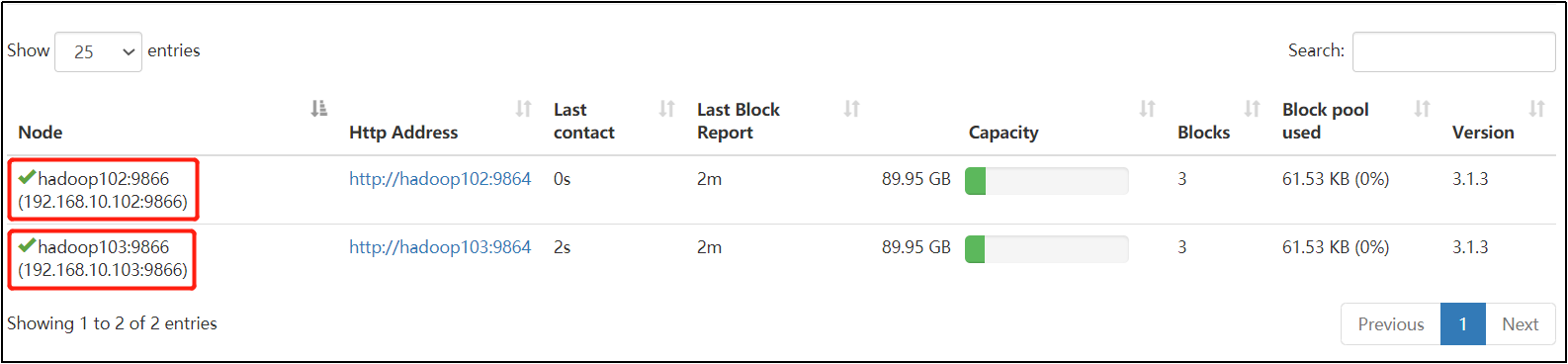

Refresh nodes successful

- View the DataNodes in a web browser again: http://hadoop102:9870/dfshealth.html#tab-datanode
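As an alternative to editing the file by hand and checking the web UI, the whitelist update and the verification can be scripted. The sketch below is only one possible approach: `add_host` and `report_hostnames` are hypothetical helpers, the `WHITELIST` environment variable is an assumption, and the parser assumes that each DataNode entry printed by `hdfs dfsadmin -report` contains a line of the form `Hostname: <name>`.

```shell
#!/usr/bin/env bash
# Append a host to a list file only if it is not already there,
# so running the script twice does not create duplicate entries.
add_host() {
    local host="$1" file="$2"
    touch "$file"
    grep -qxF -- "$host" "$file" || printf '%s\n' "$host" >> "$file"
}

# Print the hostnames found in `hdfs dfsadmin -report` text read from stdin.
# Assumption: each DataNode entry has a line like "Hostname: hadoop102".
report_hostnames() {
    awk -F': ' '/^Hostname:/ { print $2 }'
}

# Only talk to the cluster when the hdfs CLI is installed and WHITELIST
# (hypothetical variable holding the whitelist path) is set.
if command -v hdfs >/dev/null 2>&1 && [ -n "${WHITELIST:-}" ]; then
    add_host hadoop104 "$WHITELIST"
    hdfs dfsadmin -refreshNodes
    # List the DataNodes the NameNode currently considers live.
    hdfs dfsadmin -report -live | report_hostnames
fi
```

Run on the NameNode host with something like `WHITELIST=/opt/module/hadoop-3.3.6/etc/hadoop/whitelist bash refresh_whitelist.sh` (the script name is arbitrary); hadoop104 should then show up in the printed list.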